Tasks are predefined stimuli or instructions that you can present to a participant during a recording. By using precise, repeatable tasks, you can ensure data are appropriately comparable across multiple datasets (under different circumstances, for example) and across multiple participants.

For the timing of Task stimuli to be synchronized with and recorded alongside your Flow data during a recording, Tasks must be created using a specific framework and run on the same computer that is used to acquire data. You can create your own Tasks using the Kernel Tasks SDK, or you can use one of the Kernel-built Unity Tasks.

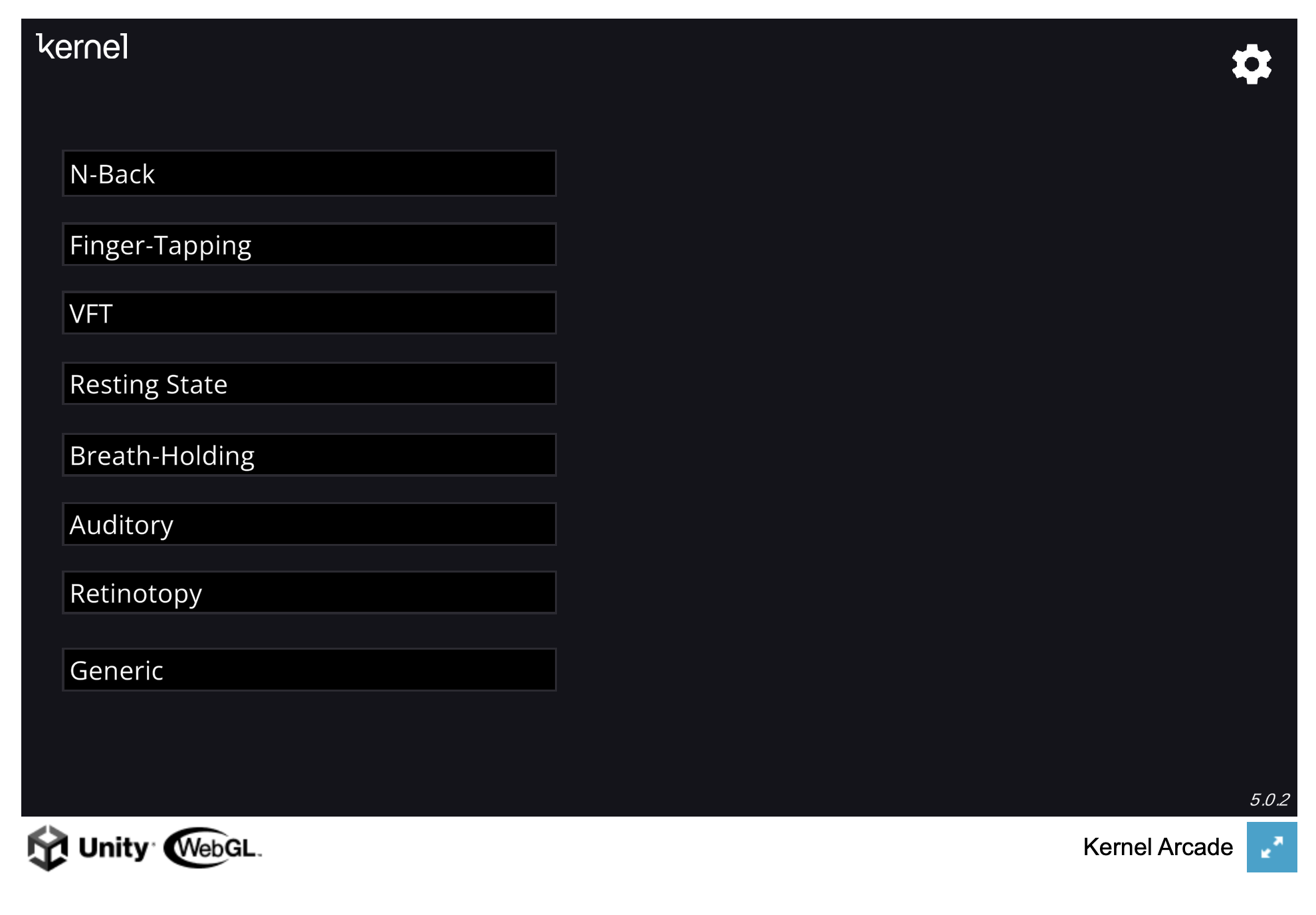

The Tasks available in the Unity menu are described below.

General Physiology tasks:

- Breath-holding: Participant breathes in sync with stimuli presented on the screen. Expect to see changes in oxyhemoglobin and deoxyhemoglobin that are similar across the entire head and synchronized to the breathing and breath-holding.

Sensory tasks:

- Finger tapping: Participant taps their thumbs to their fingertips as prompted by onscreen stimuli. Expect to see activation in the motor cortex.

    - See a Kernel publication with results from the task here.

    - Find datasets (includes SNIRF files) of the finger tapping task utilized in the above publication here.

    - See an example Jupyter notebook analyzing a SNIRF file from a finger tapping task recording using MNE Python here.

    - See an example Jupyter notebook analyzing a SNIRF file from a finger tapping task recording using Cedalion here.

- Auditory: Participant listens to an interleaved series of white noise, clips from TED talks, and silence. Expect to see activation in the auditory cortex.

- Retinotopy: Participant watches a wedge-shaped checkerboard visual stimulus that slowly rotates around a fixation point, covering all angles of the visual field over time. As the wedge moves, it stimulates different regions of the retina, producing a traveling wave of activity across the visual cortex in a pattern that mirrors the retinotopic organization of the brain. Expect to see activation in the occipital cortex.

Cognitive tasks:

- N-Back: A sequence of numbered playing cards appears onscreen. Participant responds if the current card matches the one that appeared a designated number of cards prior. This task targets working memory systems. Expect to see prefrontal activation. Learn more about N-Back.

    - See a Kernel publication with results from the task here.

- Verbal Fluency (VFT): Participants are given a letter (or a semantic word category) and asked to produce as many words as they can that start with that letter (or belong to that category) over a 30- to 60-second period. This process is repeated for different letters and categories.

    - See a Kernel publication with results from the task here.

- Resting State: Participants passively watch a 7-minute abstract video, called Inscapes, that is designed for measuring brain activity during rest. This task targets the default mode network. Learn more about the origin of Inscapes.

    - See a Kernel publication with results from the task here.
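The N-Back matching rule described above can be sketched in a few lines of Python. This is only an illustration of the rule (a card is a target when it equals the card shown `n` positions earlier); the function name `n_back_targets` and the example card sequence are hypothetical and are not taken from the Kernel Unity Task itself.

```python
from typing import List, Sequence


def n_back_targets(cards: Sequence[int], n: int) -> List[bool]:
    """For each position, report whether the card matches the one shown n positions earlier."""
    if n < 1:
        raise ValueError("n must be at least 1")
    # The first n cards can never be targets because nothing was shown n positions before them.
    return [i >= n and cards[i] == cards[i - n] for i in range(len(cards))]


# Hypothetical 2-back sequence of card values.
sequence = [3, 7, 3, 7, 5, 7]
targets = n_back_targets(sequence, n=2)
print(targets)  # [False, False, True, True, False, True]
```

A list like `targets` could be compared against a participant's responses when scoring a recording, assuming the responses are exported in trial order.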

Other:

- Generic: In this task, participants are shown a predefined sequence of commands on the screen. You provide JSON formatted as an array of blocks, where each block contains specific instructions. This task can be used to create a custom block-design task with experimental and rest periods, and it sends timestamped events based on the prompts given. Note that the task does not accept user feedback.
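As a rough illustration of what "a JSON formatted as an array of blocks" might look like for a block design, the sketch below builds alternating task and rest blocks and serializes them. The field names `prompt` and `duration_s`, the helper `make_block_design`, and the prompt text are all assumptions made for this example; consult the Generic task's own settings for the schema it actually expects.

```python
import json
from typing import Dict, List, Union

Block = Dict[str, Union[str, float]]


def make_block_design(n_cycles: int, task_prompt: str,
                      task_s: float, rest_s: float) -> List[Block]:
    """Build alternating task/rest blocks. Field names are hypothetical."""
    blocks: List[Block] = []
    for _ in range(n_cycles):
        # Experimental period: the prompt shown to the participant.
        blocks.append({"prompt": task_prompt, "duration_s": task_s})
        # Rest period between experimental periods.
        blocks.append({"prompt": "Rest", "duration_s": rest_s})
    return blocks


blocks = make_block_design(n_cycles=3, task_prompt="Tap fingers", task_s=15, rest_s=20)
payload = json.dumps(blocks, indent=2)  # JSON array of blocks
print(payload)
```

Generating the array programmatically, rather than typing it by hand, keeps block durations consistent across participants and makes it easy to change the number of cycles.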

To launch a Task from the Portal Flow UI:

- In the Data Acquisition Menu, click Launch Tasks.

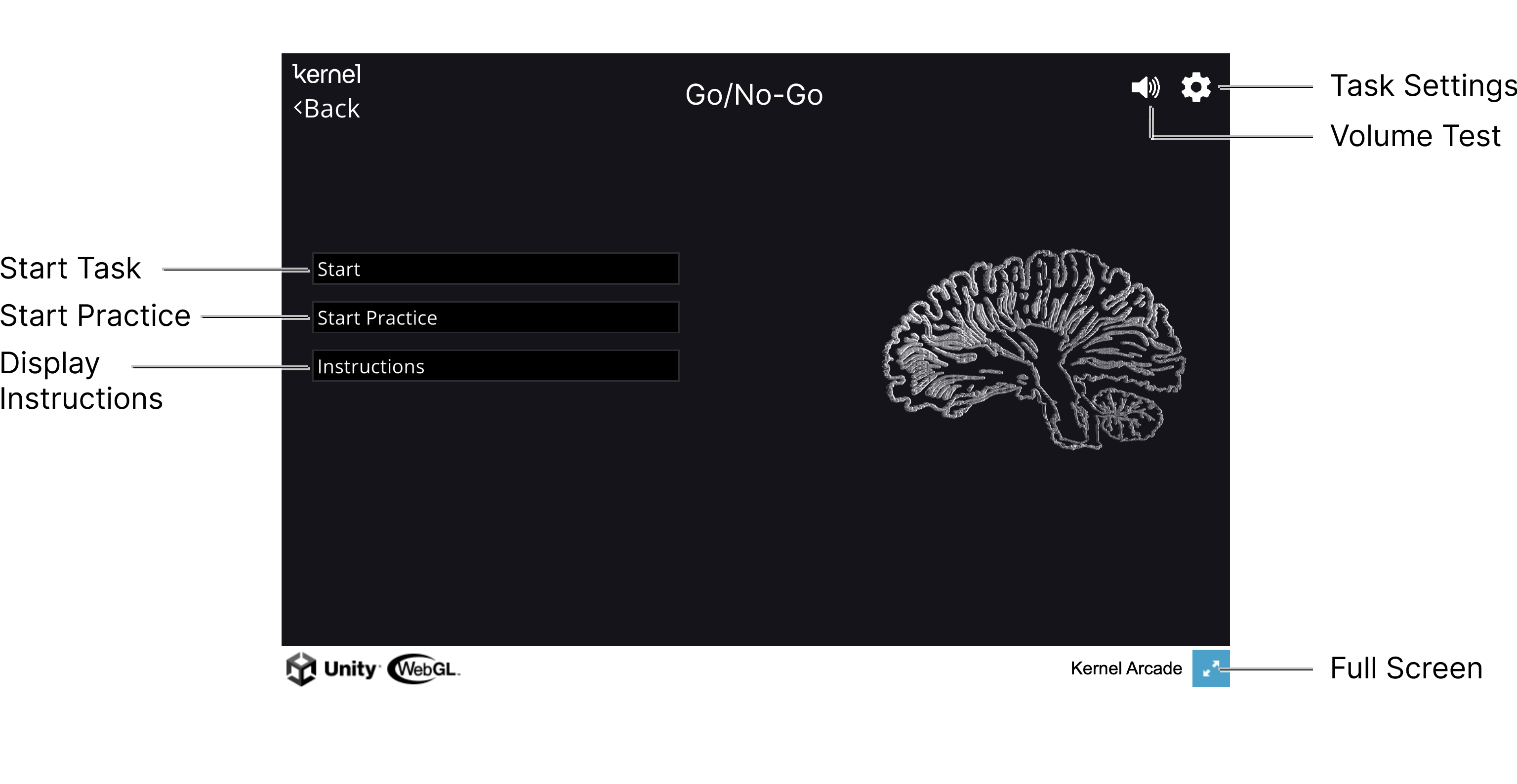

The Unity Task menu opens in a new tab.

- Click the Task you would like to run. This opens a Task-specific menu (example below).

- Click the Instructions button to view instructions for the Task.

- Click the Practice button to start a short sample run-through of the Task.

    - Practice versions of the Task may give participants additional feedback to help them learn how to do the Task.

- Click the Start button to begin the formal Task.

- Click the Settings icon to edit the parameters of the Task. Each Task has its own set of configurable parameters. These can be modified as needed or left at their default values. See the Kernel Unity Task Parameters page for an explanation of all Task parameters.

While running the Task, be sure to move the mouse pointer away from any onscreen stimuli to avoid distracting the participant. Also, be careful not to click outside the Task window while a Task is running; otherwise, keyboard inputs will not be logged.

You can also run Tasks from a separate, external device connected to the data acquisition computer via the Sync Accessory Box. To learn more, see Using the Sync Accessory Box.